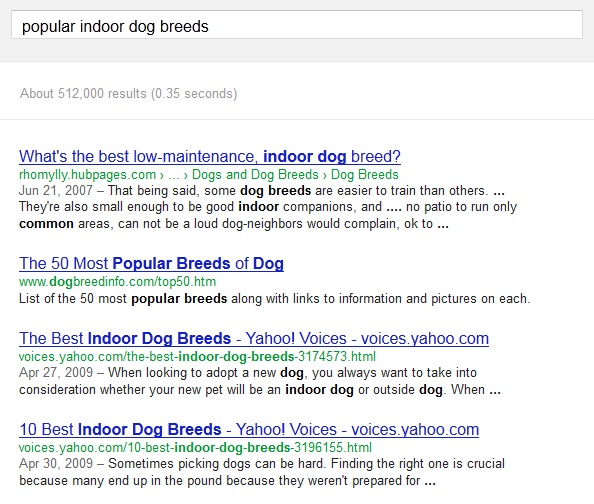

How do search engines pick your website to rank for the right keywords from among millions of other sites around the world? It seems like a perfect example of finding a needle in a haystack, but they’ve created hefty programs called spiders or bots to do just that.

Googlebot, Google’s indexing program, continuously crawls the Web, updating Google’s database of websites. When it gets to yours, it will take cues from the site structure and content to determine what your site is all about. Today we’ll talk about how you can optimize your site structure for the best results when the search engine bots find you.

Navigation

A good rule of thumb: the easier and more intuitive your site is for people to navigate, the easier it will be for search engine bots to determine what your site is about. Organize your pages into a hierarchy that groups related topics together. If you have a large site, Google may not get to every page, so make sure your most important content is in the first couple of tiers of pages. Ideally, these pages will be accessible from the main navigation bar on your home page.

Links between pages are the paths users and bots use to get around your site. Beyond the primary navigation bar, they help visitors find their way around and tell the bots a little more about what’s important on your site. Use descriptive keywords as anchor text for these links, whether they appear in the body of your pages, in the footer, or as images you use as buttons (for image links, the alt text serves as the anchor text).
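As a rough way to check anchor text, the sketch below scans a page's HTML and lists links whose text is empty or generic. The `GENERIC_PHRASES` list and the function names are made up for illustration, and the sample markup is hypothetical; treat it as a starting point rather than a complete audit.

```python
from html.parser import HTMLParser

# Hypothetical list of anchor phrases that say nothing about the target page.
GENERIC_PHRASES = {"click here", "here", "read more", "more", "learn more"}


class AnchorTextAuditor(HTMLParser):
    """Collects (href, anchor text) pairs from an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []         # finished (href, text) pairs
        self._href = None       # href of the <a> currently open, if any
        self._text_parts = []   # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            self._href = attrs["href"]
            self._text_parts = []
        elif tag == "img" and self._href is not None:
            # For image buttons, the alt text stands in for anchor text.
            self._text_parts.append(attrs.get("alt") or "")

    def handle_data(self, data):
        if self._href is not None:
            self._text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace into single spaces.
            text = " ".join(" ".join(self._text_parts).split())
            self.links.append((self._href, text))
            self._href = None


def find_vague_links(html):
    """Return links whose anchor text is empty or a generic phrase."""
    auditor = AnchorTextAuditor()
    auditor.feed(html)
    auditor.close()
    return [
        (href, text)
        for href, text in auditor.links
        if not text or text.lower() in GENERIC_PHRASES
    ]
```

Calling `find_vague_links(page_html)` on a page returns the links worth rewording, including image buttons that are missing alt text.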

You’ll also want to avoid elements that bog down load time, such as large image files and videos that play automatically. Bots spend only a limited amount of time on each site and may skip pages that take too long to load.

Tip: If you have lower pages you feel are important to have indexed, link to them directly from your home page — either in text, in the navigation bar, or with footer links.
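One way to see which pages sit too deep is to measure how many clicks each one is from the home page. The sketch below does this with a breadth-first search over a link map. The `site_links` dictionary is a hypothetical site, and the cutoff of two clicks is only an assumption standing in for "the first couple of tiers".

```python
from collections import deque


def click_depths(links, home="/"):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:          # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each key is a page, each value lists the pages it links to.
site_links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/blog": ["/blog/archive"],
    "/blog/archive": ["/blog/archive/site-structure"],
}

depths = click_depths(site_links)
# Pages more than two clicks from the home page (assumed cutoff).
deep_pages = sorted(page for page, depth in depths.items() if depth > 2)
print(deep_pages)
```

Any page that shows up in `deep_pages` is a candidate for a direct link from the home page, the navigation bar, or the footer.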

URL Structure

Do the URLs of your inner pages look something like this?

www.yoursite.com/index.php?option=com_content&view=article&id=8&Itemid=4

URLs with long strings of nonsense aren’t just annoying to people; they also tell search engine bots nothing about the page. Make your inner page URLs brief and helpful, like this:

www.yoursite.com/descriptive-title

URLs should also make it easy for people and bots to understand your site’s hierarchy. Include the path in URLs for pages deeper in your site, like this:

www.yoursite.com/descriptive-title/specific-topic

Tip: Use a hyphen (-) rather than an underscore (_) to separate words in your URLs.
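If your pages are generated from titles, a small helper can produce URLs in this style. The sketch below is one possible approach, not a standard: the names `slugify` and `page_url` are made up for illustration, and it assumes you want lowercase, ASCII-only slugs.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Strip accents and other non-ASCII characters ("é" becomes "e").
    ascii_title = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Drop apostrophes so "Beginner's" becomes "beginners", not "beginner-s".
    ascii_title = ascii_title.replace("'", "")
    # Replace each run of anything that is not a letter or digit with one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower())
    return slug.strip("-")


def page_url(domain, *titles):
    """Join a domain and a chain of page titles into a hierarchical URL."""
    return "/".join([domain] + [slugify(title) for title in titles])
```

For example, `page_url("www.yoursite.com", "Descriptive Title", "Specific Topic")` gives `www.yoursite.com/descriptive-title/specific-topic`.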

Redirects

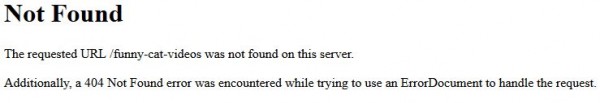

Duplicate pages and links that don’t lead anywhere can be detrimental to your search engine rankings. The solution is to lead bots and visitors to where you want them to be. Two tools will serve most of your needs: 301 redirects and custom 404 pages. (Strictly speaking, a 404 is an error response rather than a redirect.)

301 redirects: These are useful when you have multiple versions of the same page, either because you’ve moved your site to a new URL or because your site can be accessed from multiple URLs (e.g., yoursite.com, www.yoursite.com, home.yoursite.com). A 301 is a permanent redirect, usually set up with a rule on your web server or through your CMS, that tells Google and browsers to go to the correct page instead of the old or duplicate one. Most visitors won’t even notice they’ve been redirected. Click here for instructions for creating a 301 redirect.
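To make the mechanics concrete, here is a minimal sketch using Python's built-in `http.server`. In practice a 301 is more often configured in the web server or CMS than written by hand. `CANONICAL_HOST`, the `http://` scheme, the port, and the function names are all assumptions for illustration.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumption for illustration: the one hostname you want indexed.
CANONICAL_HOST = "www.yoursite.com"


def redirect_target(host, path):
    """Return the canonical URL to redirect to, or None if none is needed."""
    hostname = host.split(":")[0].lower()   # ignore any port in the Host header
    if hostname == CANONICAL_HOST:
        return None
    return f"http://{CANONICAL_HOST}{path}"


class CanonicalRedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        target = redirect_target(self.headers.get("Host", ""), self.path)
        if target is not None:
            # 301 tells browsers and crawlers that the move is permanent.
            self.send_response(301)
            self.send_header("Location", target)
            self.end_headers()
            return
        body = b"<h1>Home</h1>"   # placeholder page content
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Run the demo server locally until interrupted."""
    HTTPServer(("localhost", port), CanonicalRedirectHandler).serve_forever()
```

With this in place, a request for `yoursite.com/about` or `home.yoursite.com/about` is answered with a 301 pointing at `www.yoursite.com/about`, so only one version of each page gets indexed.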

404 error pages: When you delete or move a page from your website, by default visitors and bots who request that page’s URL will be taken to a dead-end 404 error page. You can customize your 404 pages so they look friendlier and give visitors options to continue on your site, which keeps visitors and bots moving happily through it. If the page has moved rather than disappeared, use a 301 redirect to the new URL instead, since that is what preserves the authority the old page had built up. Click here for a good tutorial on creating a 404 page.
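A friendlier 404 page can be as simple as an apology plus a few links back into the site. The sketch below shows the idea in Python. `KNOWN_PAGES`, `SUGGESTED_LINKS`, and the function names are hypothetical, and a real site would normally set this up through its web server or CMS.

```python
from html import escape

# Hypothetical set of URLs that exist on the site.
KNOWN_PAGES = {"/", "/services", "/contact"}

# Hypothetical pages to suggest when a visitor hits a dead end.
SUGGESTED_LINKS = [("/", "Home"), ("/services", "Services"), ("/contact", "Contact us")]


def not_found_page(path, links=SUGGESTED_LINKS):
    """Build a friendly HTML body for a missing page, with links into the site."""
    items = "\n".join(
        f'  <li><a href="{escape(href)}">{escape(label)}</a></li>'
        for href, label in links
    )
    return (
        "<h1>Sorry, we couldn't find that page</h1>\n"
        f"<p>Nothing lives at {escape(path)} any more. Try one of these instead:</p>\n"
        f"<ul>\n{items}\n</ul>"
    )


def respond(path):
    """Return (status code, HTML body) for a requested path."""
    if path in KNOWN_PAGES:
        return 200, f"<h1>{escape(path)}</h1>"   # placeholder page content
    # Keep the 404 status so crawlers still learn that the page is gone.
    return 404, not_found_page(path)
```

The design choice worth noting is that the response stays a 404: the page is friendlier for people, but bots still get an accurate signal that the URL no longer exists.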

Tip: Sites with large eStores tend to delete and/or duplicate content frequently as products change. Make sure you have a system in place to implement appropriate redirects where needed.

Sitemap

Sitemaps are just what they sound like: maps of your site that make it easy for visitors or bots to find the pages they’re looking for. There are two main kinds of sitemaps: HTML and XML. HTML sitemaps are more useful for visitors, while search engines prefer XML. You can add both or just one.

Keep in mind that Google can still index your site even if you don’t have a sitemap. If you have a large site, though, having a sitemap may get you indexed a little faster, and may even get a few more pages indexed. It’s worth the time to put one in.

Tip: You can get a free XML sitemap file made for your site at xml-sitemaps.com.
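If you would rather generate the file yourself, an XML sitemap is simple enough to build with Python's standard library. The sketch below writes the basic `urlset` format from the sitemaps.org protocol. The function name and the example URLs are assumptions, and optional tags such as `lastmod` are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return an XML sitemap (as a string) listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" when serializing.
        ET.SubElement(entry, "loc").text = url
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


# Hypothetical pages; sitemap entries must be full URLs including the scheme.
pages = [
    "http://www.yoursite.com/",
    "http://www.yoursite.com/descriptive-title",
    "http://www.yoursite.com/descriptive-title/specific-topic",
]
sitemap_xml = build_sitemap(pages)
```

Save the result as `sitemap.xml` (encoded as UTF-8) in the root of your site so bots can find it.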